Code of Music

Harmony

Eerie Drones

Ideas

Educational Chord Generator I really liked David’s project in class last week - it made exploring basic synthesis settings super accessible, with all components randomly generated by a single button press and then displayed to the user (if they ask for it). A computer interface with a ton of knobs (let alone an analogue synth) can be quite intimidating for novices, so David’s implementation lowers the barriers to creative play. He even integrated a keyboard so that a user could then experiment with their synth. How would a similar interface look for chord progression/harmony?

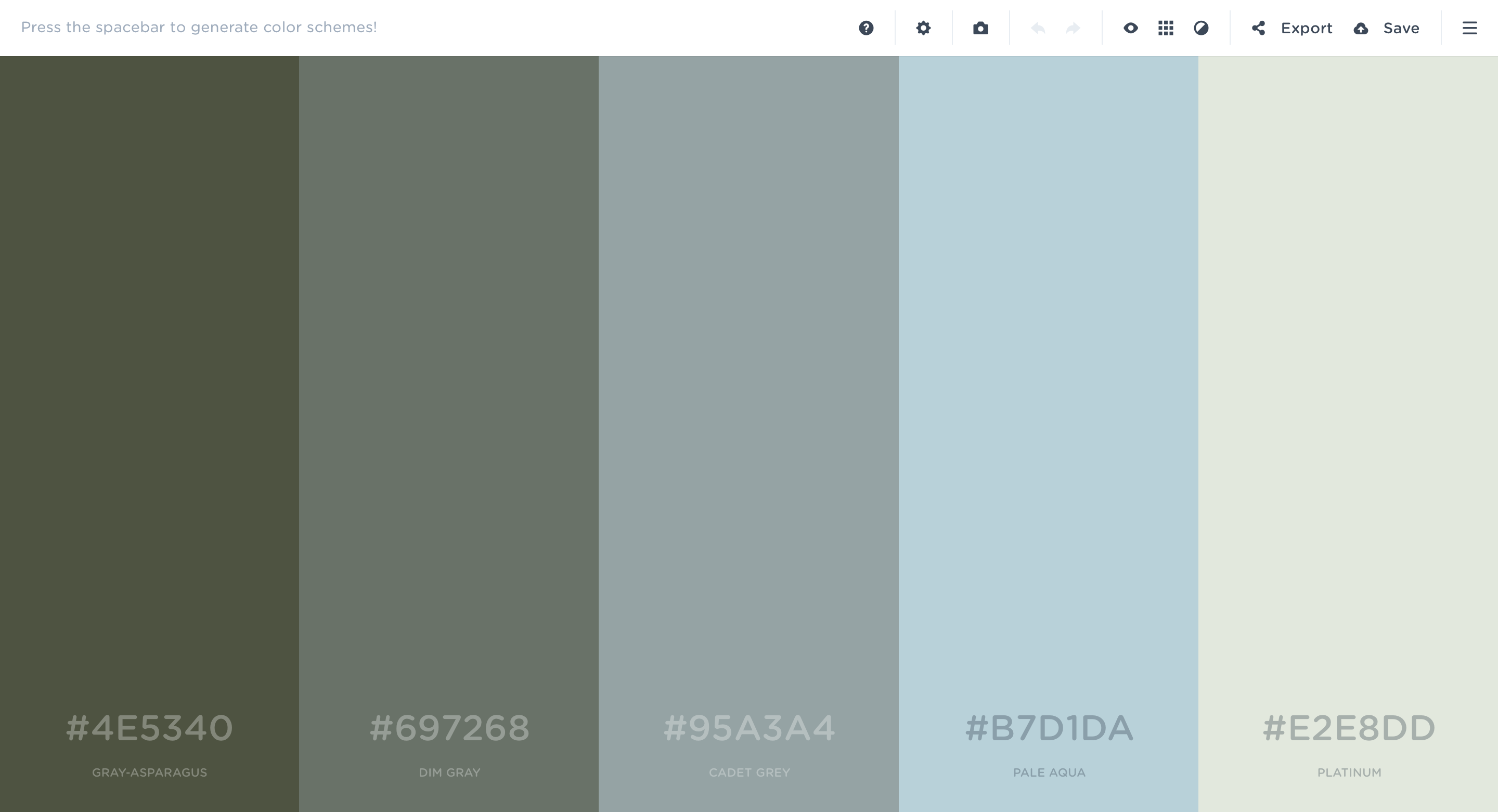

I think the interface for Coolors is excellent in helping one generate color palettes - it begins with a random color selection, but then allows the user to lock and/or tweak the colors they like. I envisioned a similar interface with pitches instead of colors: it would begin by generating a random number of pitches, then allow the user to lock in the ones they like or tweak one while holding the other notes constant. It would print the chord that the user has created. An added feature could save the chord into a user’s backpack to aid them in generating chord progressions (with automatic transposition).
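The lock-and-regenerate idea can be sketched in a few lines. This is only an illustration of the concept, not code from any project: the slot shape (`pitch`, `locked`), the `PITCHES` list, and the `rerollChord` name are all assumptions made for the example.

```javascript
// All twelve pitch classes; a real tool might restrict this to a key.
const PITCHES = ['C', 'C#', 'D', 'D#', 'E', 'F', 'F#', 'G', 'G#', 'A', 'A#', 'B'];

// Replace every unlocked slot with a random pitch; locked slots are kept as-is.
// `rng` is injectable so the behavior can be checked deterministically.
function rerollChord(slots, rng = Math.random) {
  return slots.map(slot =>
    slot.locked
      ? slot
      : { pitch: PITCHES[Math.floor(rng() * PITCHES.length)], locked: false }
  );
}

// Example: the user has locked A and asks for new suggestions for the rest.
const chord = [
  { pitch: 'A', locked: true },
  { pitch: 'C', locked: false },
  { pitch: 'E', locked: false }
];
console.log(rerollChord(chord).map(slot => slot.pitch));
```

Returning a new array instead of mutating the old one would make an undo button or a saved-chord "backpack" easier to add later.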

Drones I have a tendency to put a whole lot of hours into these projects and the above idea seemed hard to pull off in a week. The next idea that has been floating around in my mind is an automatic drone generator. I play electric guitar and practice soloing all the time - it would be cool to have a generative background sound locked into the key I am playing in.

My goal was to merge some of the ideas from past projects. I imagined vertical lines similar to the first assignment, tones that die out over time similar to the melody assignment requiring constant user input to keep producing sound, and a similar interface with clicking to activate and button presses to

Implementation

The app created is largely how I envisioned it. Through a single button interface, the user adds new pitches to the overall texture. They are accompanied by vertical lines that rise up the screen as long as the pitch is audible, and their horizontal position reflects their panning. The instrument is mostly in A Minor with diatonic pitches more likely to be produced than chromatic pitches.
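As a rough illustration of the visual mapping described above, here is a minimal sketch. It assumes panning is expressed in the range -1 (hard left) to 1 (hard right), and the `line` object with `audible`, `height`, and `speed` fields is hypothetical rather than taken from the app.

```javascript
// Map a pan value in [-1, 1] to an x coordinate on a canvas of the given width.
function panToX(pan, canvasWidth) {
  const clamped = Math.min(1, Math.max(-1, pan));
  return ((clamped + 1) / 2) * canvasWidth;
}

// Hypothetical per-frame update: a line keeps rising only while its pitch is audible.
function updateLine(line, dtSeconds) {
  if (line.audible) {
    line.height += line.speed * dtSeconds;
  }
  return line;
}

console.log(panToX(-1, 800), panToX(0, 800), panToX(1, 800));
```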

The actual pitches are decided through weighted probabilities. In synth.js, I declare the following arrays, where each prob is a cumulative threshold rather than an individual weight:

    // Cumulative thresholds for pitch classes: the larger the step from the
    // previous entry, the more likely the pitch.
    let note_probs = [
      {pitch: 'A', prob: 0.2},
      {pitch: 'A#', prob: 0.22},
      {pitch: 'B', prob: 0.3},
      {pitch: 'C', prob: 0.4},
      {pitch: 'C#', prob: 0.44},
      {pitch: 'D', prob: 0.55},
      {pitch: 'D#', prob: 0.57},
      {pitch: 'E', prob: 0.77},
      {pitch: 'F', prob: 0.87},
      {pitch: 'F#', prob: 0.9},
      {pitch: 'G', prob: 1}
    ];

    // Cumulative thresholds for octaves.
    let octave_probs = [
      {octave: 2, prob: 0.05},
      {octave: 3, prob: 0.25},
      {octave: 4, prob: 0.45},
      {octave: 5, prob: 0.65},
      {octave: 6, prob: 0.85},
      {octave: 7, prob: 1}
    ];

A later function generates two random numbers, checks where each one falls along its weighted probability list, and joins the resulting pitch and octave into a note name. For example, the values 0.7 and 0.3 would result in E4. Every time the button is pressed, a totally new Tone.Synth() is spawned with a random pitch and random settings ranging from duration to timbre. I played a lot with timbre and ended up using a slow random vibrato to create some eerie pitch variation, and heavy use of reverb to support the atmosphere. To be honest, this is the most “hacked-together” assignment so far, as I spent a lot of time experimenting with different effects and the ranges controlling them, and some of the values might seem arbitrary at first glance.
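A minimal sketch of how such a lookup might work, reusing the two tables from synth.js. The function names `pickWeighted` and `randomNote` are assumptions for illustration, and whether the original compares with `<` or `<=` at the boundaries is not known.

```javascript
// Cumulative thresholds copied from the tables in synth.js.
const note_probs = [
  {pitch: 'A', prob: 0.2}, {pitch: 'A#', prob: 0.22}, {pitch: 'B', prob: 0.3},
  {pitch: 'C', prob: 0.4}, {pitch: 'C#', prob: 0.44}, {pitch: 'D', prob: 0.55},
  {pitch: 'D#', prob: 0.57}, {pitch: 'E', prob: 0.77}, {pitch: 'F', prob: 0.87},
  {pitch: 'F#', prob: 0.9}, {pitch: 'G', prob: 1}
];
const octave_probs = [
  {octave: 2, prob: 0.05}, {octave: 3, prob: 0.25}, {octave: 4, prob: 0.45},
  {octave: 5, prob: 0.65}, {octave: 6, prob: 0.85}, {octave: 7, prob: 1}
];

// Return the first entry whose cumulative threshold is at least r.
// Assumes r is in [0, 1), as returned by Math.random().
function pickWeighted(table, r) {
  for (const entry of table) {
    if (r <= entry.prob) return entry;
  }
  return table[table.length - 1]; // fallback in case r exceeds the last threshold
}

// Join a randomly chosen pitch class and octave into a note name.
// The random numbers can be passed in to make the result reproducible.
function randomNote(r1 = Math.random(), r2 = Math.random()) {
  const pitch = pickWeighted(note_probs, r1).pitch;
  const octave = pickWeighted(octave_probs, r2).octave;
  return pitch + octave; // string concatenation: 'E' + 4 gives 'E4'
}

console.log(randomNote(0.7, 0.3)); // 'E4', matching the example in the text
console.log(randomNote());         // a fresh random note
```

Storing cumulative thresholds keeps the lookup to a single pass, and the gap between neighboring thresholds is the actual probability of each entry (0.2 for E, for example, but only 0.02 for A#).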

Challenges

I had more issues getting this project to work than with any other. They mainly had to do with amplitude and timing problems in spawning synths. Generating this many synths quickly becomes way too loud. The problem is assuaged somewhat by a lowpass filter at the end of the signal chain, but this alters the tone of all sounds and still doesn’t reduce overly loud midrange synths (which remain a problem). Is there a better approach to creating random pitches and timbres that keeps a somewhat consistent volume among synths?
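One possible direction, offered as an assumption rather than something tried in this project, is to lower each voice's level as the number of sounding voices grows. Summing N uncorrelated voices of equal level raises the total power by about 10 * log10(N) dB, so subtracting that amount from each voice keeps the overall level roughly steady. The `voiceVolumeDb` helper and the -12 dB starting level are hypothetical.

```javascript
// Assumed level of a single voice playing alone, in decibels (arbitrary choice).
const SINGLE_VOICE_DB = -12;

// Per-voice level that keeps the summed output roughly constant as voices are added.
function voiceVolumeDb(activeVoices, singleVoiceDb = SINGLE_VOICE_DB) {
  const n = Math.max(1, activeVoices); // treat zero or negative counts as one voice
  return singleVoiceDb - 10 * Math.log10(n);
}

for (const n of [1, 2, 4, 8]) {
  console.log(n + ' voices: ' + voiceVolumeDb(n).toFixed(1) + ' dB each');
}
```

In Tone.js a synth's `volume` is expressed in decibels, so a value like this could be assigned to `synth.volume.value` when a voice is spawned. This only compensates for voice count; it does not address timbres that are inherently louder in the midrange. Placing a `Tone.Limiter` at the end of the chain is another safety net worth trying.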

Worse than that, I think there is a bug in Tone’s ADSR implementation (or a lack of understanding on my part). No matter what value I set, the release was always super short. This was problematic because my intention was to create long tones that fade in and out. It also made it harder to get the tones to sync up with the visuals I had created. This was true for both the amplitude envelope and the filter envelope, which resulted in overly dramatic sounds each time release was triggered. As a result, I ended up scrapping Tone.MonoSynth() and using the simpler Tone.Synth() instead. Instead of relying on release, I use a long decay and low sustain so that the fade-in and fade-out happen largely before the synth disappears.
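The workaround can be sketched as an envelope whose attack and decay together span the whole note. The proportions here are guesses for illustration, not the values used in the app, and the `droneEnvelope` and `playDrone` names are hypothetical. `playDrone` assumes Tone.js 14 or later is loaded on the page (older releases use `toMaster()` instead of `toDestination()`).

```javascript
// Spread the fade-in and fade-out across the note using attack and decay only,
// so that very little is left for the release phase to do.
function droneEnvelope(durationSeconds) {
  return {
    attack: durationSeconds * 0.3, // slow fade-in
    decay: durationSeconds * 0.7,  // long fade-out toward the sustain level
    sustain: 0.05,                 // nearly silent by the time the note ends
    release: 0.5                   // short tail
  };
}

// Browser only: requires Tone.js, and should be called from a click or key
// handler so the audio context is allowed to start.
function playDrone(note, durationSeconds) {
  const synth = new Tone.Synth({
    envelope: droneEnvelope(durationSeconds)
  }).toDestination();
  synth.triggerAttackRelease(note, durationSeconds);
  return synth;
}

console.log(droneEnvelope(10));
```

Because the tone has almost fully faded by the end of the decay, the visuals can be timed against the note duration alone.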

Future Ideas

- Autoplay Mode - the computer generates new notes on its own. This way I could practice guitar while computer drones accompany me automatically. How often these intervals are triggered would be fun to explore perhaps through different programmed behavior types.

- Multi-button Input Mode - The user could specify how high a frequency they want to hear by where along the keyboard (left to right) they press. This could give extra control over how a drone progresses from low to high over time, and let the user ensure a diverse frequency space when they want it.

- Graphics Enhancements - I really wanted to create depth with the pitch lines. Slower moving lines would be darker and perhaps a little smaller. This would involve sorting the array of lines (or adding a new line to the right position) to make sure that they are drawn in the correct order. It would not take too long for me to implement, but I am out of time. Beyond this, I should explore what other musical features, frequency especially, can be represented visually.
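The draw-order idea from the last item could look something like this. It is a sketch under assumptions: each line is taken to have a `speed` field, slower lines are treated as farther away and drawn first, and the brightness range is arbitrary.

```javascript
// Insert a line so the array stays ordered by speed, slowest first.
// Drawing in array order then paints slow (distant) lines before fast ones.
function insertBySpeed(lines, line) {
  let lo = 0;
  let hi = lines.length;
  while (lo < hi) {
    const mid = (lo + hi) >> 1;
    if (lines[mid].speed <= line.speed) lo = mid + 1;
    else hi = mid;
  }
  lines.splice(lo, 0, line);
  return lines;
}

// Hypothetical shading: slower lines are darker (80) and faster ones brighter (255).
function brightnessFor(speed, maxSpeed) {
  const t = Math.min(1, Math.max(0, speed / maxSpeed));
  return Math.round(80 + t * 175);
}

const lines = [];
for (const speed of [3, 1, 2]) insertBySpeed(lines, { speed });
console.log(lines.map(l => l.speed)); // [ 1, 2, 3 ]
```

Inserting each new line at its sorted position avoids re-sorting the whole array every frame.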

Play it. (Supported by Chrome.)

A blog for displaying Willie Payne’s progress in the NYU ITP Course “The Code of Music.”